Google, OpenAI, DeepSeek, et al. are nowhere near achieving AGI (Artificial General Intelligence), according to a new benchmark.

The Arc Prize Foundation, a nonprofit that measures AGI progress, has a new benchmark that is stumping the leading AI models. The test, called ARC-AGI-2, is the second edition of the ARC-AGI benchmark, which tests models on general intelligence by challenging them to solve visual puzzles using pattern recognition, context clues, and reasoning.


According to the ARC-AGI leaderboard, OpenAI's most advanced model, o3-low, scored 4 percent. Google's Gemini 2.0 Flash and DeepSeek R1 both scored 1.3 percent. Anthropic's most advanced model, Claude 3.7 with an 8K token limit (the number of tokens it can use to process an answer), scored 0.9 percent.

The question of how and when AGI will be achieved remains as heated as ever, with various factions bickering about the timeline or whether it's even possible. Anthropic CEO Dario Amodei said it could take as little as two to three years, and OpenAI CEO Sam Altman said "it's achievable with current hardware." But experts like Gary Marcus and Yann LeCun say the technology isn't there yet. And it doesn't take an expert to see how fueling AGI hype benefits AI companies seeking major investments.

The ARC-AGI benchmark is designed to challenge AI models beyond specialized intelligence by avoiding the memorization trap: spewing out PhD-level responses without understanding what they mean. Instead, it focuses on puzzles that are relatively easy for humans to solve because of our innate ability to take in new information and make inferences, thus revealing gaps that can't be closed simply by feeding AI models more data.

"Intelligence requires the ability to generalize from limited experience and apply knowledge in new, unexpected situations. AI systems are already superhuman in many specific domains (e.g., playing Go and image recognition)," read the announcement.

"However, these are narrow, specialized capabilities. The 'human-ai gap' reveals what's missing for general intelligence - highly efficiently acquiring new skills."

To get a sense of AI models' current limitations, you can take the ARC-AGI test for yourself. And you might be surprised by its simplicity. There's some critical thinking involved, but the ARC-AGI test wouldn't be out of place next to the New York Times crossword puzzle, Wordle, or any of the other popular brain teasers. It's challenging but not impossible, and the answer is there in the puzzle's logic, which is something the human brain has evolved to interpret.

OpenAI's o3-low model scored 75.7 percent on the first edition of ARC-AGI. By comparison, its 4 percent score on the second edition shows how difficult the new test is, and how much work remains before AI reaches human-level intelligence.

Topics Google OpenAI

(Editor: {typename type="name"/})

U.N. aims to make carbon emissions cost money at COP 25 climate talks

U.N. aims to make carbon emissions cost money at COP 25 climate talks

Arthur Miller on The Crucible by Sadie Stein

Arthur Miller on The Crucible by Sadie Stein

Reddit CEO's AMA over third

Reddit CEO's AMA over third

“Things Grown

“Things Grown

The Amazon Book Sale is coming April 23 through 28

The Amazon Book Sale is coming April 23 through 28

Van Jones' moving reaction to Biden's election win says it all

Nailed it.In the breathless moments after major networks called the 2020 election for presumed Presi

...[Details]

Nailed it.In the breathless moments after major networks called the 2020 election for presumed Presi

...[Details]

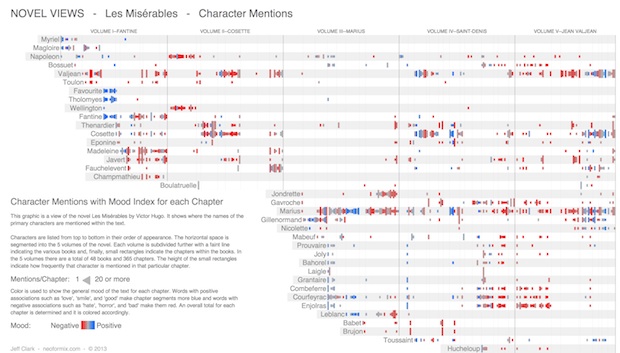

The Characters of Les Misérables are Sad by Sadie Stein

The Characters of Les Misérablesare SadBy Sadie SteinJanuary 18, 2013Arts & CultureHere is a moo

...[Details]

The Characters of Les Misérablesare SadBy Sadie SteinJanuary 18, 2013Arts & CultureHere is a moo

...[Details]

Robinhood delists 3 crypto tokens, Binance suspends U.S. dollar deposits and withdrawals

Just days after the U.S. Securities and Exchange Commission (SEC) sued crypto exchanges Binance and

...[Details]

Just days after the U.S. Securities and Exchange Commission (SEC) sued crypto exchanges Binance and

...[Details]

Skywatching is lit in May, says NASA

Here's a wholesome quarantine activity: For the rest of May you can view bright objects in our solar

...[Details]

Here's a wholesome quarantine activity: For the rest of May you can view bright objects in our solar

...[Details]

How to be a Bureaucrat, and Other News by Sadie Stein

How to be a Bureaucrat, and Other NewsBy Sadie SteinJanuary 11, 2013On the ShelfHow to query an agen

...[Details]

How to be a Bureaucrat, and Other NewsBy Sadie SteinJanuary 11, 2013On the ShelfHow to query an agen

...[Details]

Revel YellBy Sadie SteinApril 12, 2012The RevelWhen people hear that one works at The Paris Review,

...[Details]

Revel YellBy Sadie SteinApril 12, 2012The RevelWhen people hear that one works at The Paris Review,

...[Details]

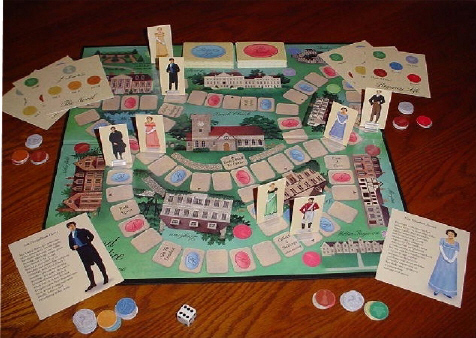

A Truth Universally Acknowledged by Sadie Stein

A Truth Universally AcknowledgedBy Sadie SteinJanuary 14, 2013Arts & CultureIn honor of the two

...[Details]

A Truth Universally AcknowledgedBy Sadie SteinJanuary 14, 2013Arts & CultureIn honor of the two

...[Details]

Alienated

...[Details]

Alienated

...[Details]

The Beau Monde of Mrs. Bridge by Evan S. Connell

The Beau Monde of Mrs. BridgeBy Evan S. ConnellJanuary 11, 2013FictionOur great contributor Evan Con

...[Details]

The Beau Monde of Mrs. BridgeBy Evan S. ConnellJanuary 11, 2013FictionOur great contributor Evan Con

...[Details]

接受PR>=1、BR>=1,流量相当,内容相关类链接。